ChatGPT 5: Are We Closer to AGI?

Introduction

The release of ChatGPT 5 marks a watershed moment in the evolution of large language models. Rolled out to ChatGPT's roughly 700 million weekly users and integrated into products like Microsoft Copilot, GPT-5 has been touted as “a significant step” toward artificial general intelligence (AGI) (Milmo, 2025). Yet debates persist over whether its enhancements represent genuine strides toward a system capable of human-level reasoning in any domain or merely incremental advances on narrow tasks. This post traces the journey from early GPT iterations to GPT-5, considers how AGI is defined, and explores how specialized AI hardware, led by startups such as Etched with its Sohu ASIC, could accelerate or constrain progress toward that elusive goal.

The Evolution of GPT Models

Since the original GPT launched in 2018, OpenAI’s models have grown steadily in scale and capability. GPT-1 demonstrated unsupervised (generative) pretraining on a general text corpus, GPT-2 expanded to 1.5 billion parameters, and GPT-3 jumped to 175 billion parameters, showcasing zero-shot and few-shot learning. GPT-3.5 refined chat interactions, and GPT-4 introduced multimodal inputs. GPT-4o and GPT-4.5 extended the flagship line, while OpenAI’s separate o-series models (such as o1 and o3) added extended “chain-of-thought” reasoning. GPT-5 unifies these lines into a single system that claims to integrate reasoning, “vibe coding,” and agentic functions without requiring users to select a mode manually (Zeff, 2025).

Defining Artificial General Intelligence

AGI refers to a system that can understand, learn, and apply knowledge across any intellectual task that a human can perform. Key attributes include autonomous continuous learning, broad domain transfer, and goal-driven reasoning. OpenAI’s own definition frames AGI as “a highly autonomous system that outperforms humans at most economically valuable work” (Milmo, 2025). Critics emphasize continuous self-improvement and real-world adaptability, traits GPT-5 still lacks: it must be retrained offline to acquire new skills rather than learning online during use (Griffiths & Varanasi, 2025).
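The difference between offline retraining and online learning can be made concrete with a toy model. The sketch below, a one-feature linear regression updated by stochastic gradient descent, contrasts a model that is fit once and then frozen with one that keeps updating after deployment when the underlying data shifts. The data stream, the `OnlineLinearModel` class, and the learning rate are hypothetical choices for illustration; nothing here describes how GPT-5 or any production language model is trained.

```python
import random


class OnlineLinearModel:
    """Toy 1-D linear model y = w * x + b, updated by SGD one example at a time."""

    def __init__(self, lr: float = 0.05) -> None:
        self.w = 0.0
        self.b = 0.0
        self.lr = lr

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def update(self, x: float, y: float) -> None:
        # Gradient step on the squared error 0.5 * (prediction - y) ** 2.
        err = self.predict(x) - y
        self.w -= self.lr * err * x
        self.b -= self.lr * err


def stream(n: int, slope: float, seed: int = 0):
    """Hypothetical noise-free data stream following y = slope * x + 1."""
    rng = random.Random(seed)
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        yield x, slope * x + 1.0


# "Frozen" model: fit once on historical data (slope 2), then never updated.
frozen = OnlineLinearModel()
for x, y in stream(2000, slope=2.0):
    frozen.update(x, y)

# Online model: starts from the same weights but keeps learning in deployment.
online = OnlineLinearModel()
online.w, online.b = frozen.w, frozen.b

# The world drifts (true slope becomes -1); only the online model sees updates.
for x, y in stream(2000, slope=-1.0, seed=1):
    online.update(x, y)

print(f"frozen slope ~ {frozen.w:.2f}, online slope ~ {online.w:.2f}")
```

With these settings the frozen model's slope stays near 2 while the online model's slope moves to about -1, tracking the shift. Continual learning at LLM scale is far harder, not least because naive updates can overwrite earlier knowledge (catastrophic forgetting).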

Capabilities and Limitations of ChatGPT 5

Reasoning and Multimodality

GPT-5 demonstrates improved chain-of-thought reasoning, surpassing GPT-4’s scores on benchmarks in mathematics, logic puzzles, and abstraction. It processes text, voice, and images in a unified pipeline, enabling applications such as on-the-fly document analysis and voice-guided tutoring (Strickland, 2025).

Vibe Coding

A standout feature, “vibe coding,” lets users describe the software they want in natural language and receive working code, often within seconds. On SWE-bench Verified, a benchmark of real-world coding tasks drawn from GitHub, GPT-5 achieved a 74.9% first-attempt success rate, narrowly edging out Anthropic’s Claude Opus 4.1 (74.5%) and comfortably surpassing Google DeepMind’s Gemini 2.5 Pro (59.6%) (Zeff, 2025).
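A first-attempt (pass@1) score is the share of benchmark tasks solved on the model's first try. The sketch below shows that computation on a small, made-up list of per-task outcomes, then computes the percentage-point gaps between the SWE-bench scores reported above. The `first_attempt_rate` helper and the sample outcomes are hypothetical; only the three scores come from the cited source.

```python
def first_attempt_rate(outcomes: list[bool]) -> float:
    """Percentage of tasks whose first attempt passed (pass@1)."""
    if not outcomes:
        raise ValueError("no tasks to score")
    return 100.0 * sum(outcomes) / len(outcomes)


# Hypothetical per-task results: True means the first patch passed the tests.
sample = [True, True, False, True]
print(first_attempt_rate(sample))

# Reported SWE-bench scores in percent (Zeff, 2025).
reported = {"GPT-5": 74.9, "Claude Opus 4.1": 74.5, "Gemini 2.5 Pro": 59.6}
for name, score in reported.items():
    if name != "GPT-5":
        gap = reported["GPT-5"] - score
        print(f"GPT-5 leads {name} by {gap:.1f} percentage points")
```

The 0.4-point lead over Claude Opus 4.1 is why "edging out" fits: a gap that small may fall within run-to-run variation, whereas the 15.3-point gap over Gemini 2.5 Pro is substantial.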

Agentic Tasks

GPT-5 autonomously selects and orchestrates external tools—calendars, email, or APIs—to fulfill complex requests. This “agentic AI” paradigm signals movement beyond static chat, illustrating a new class of assistants capable of executing multi-step workflows (Zeff, 2025).
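The general pattern behind tool orchestration can be sketched as a loop: a planner proposes a tool call, a dispatcher runs it against a registry of allowed tools, and the result is fed back until the plan is complete. This is a toy under stated assumptions. The planner is a hard-coded stand-in for a model, and the tool names (`find_free_slot`, `send_email`) and addresses are hypothetical; it is not OpenAI's API or GPT-5's internal mechanism.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class ToolCall:
    name: str
    args: dict


# Hypothetical tools an assistant might be allowed to call.
def find_free_slot(day: str) -> str:
    return f"{day} 14:00"


def send_email(to: str, body: str) -> str:
    return f"sent to {to}: {body}"


TOOLS: dict[str, Callable[..., str]] = {
    "find_free_slot": find_free_slot,
    "send_email": send_email,
}


def stub_planner(request: str, history: list[str]) -> ToolCall | None:
    """Stand-in for a model: picks the next tool call from prior results."""
    if not history:
        return ToolCall("find_free_slot", {"day": "Friday"})
    if len(history) == 1:
        return ToolCall("send_email", {"to": "team@example.com",
                                       "body": f"Meeting at {history[0]}"})
    return None  # plan complete


def run_agent(request: str, max_steps: int = 5) -> list[str]:
    history: list[str] = []
    for _ in range(max_steps):  # step cap guards against runaway loops
        call = stub_planner(request, history)
        if call is None:
            break
        if call.name not in TOOLS:
            raise KeyError(f"unknown tool: {call.name}")
        history.append(TOOLS[call.name](**call.args))
    return history


print(run_agent("Book a team meeting on Friday and tell everyone"))
```

The second call depends on the first call's result, which is what distinguishes a multi-step workflow from a single chat reply; the `max_steps` cap is a simple design choice to bound a misbehaving planner.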

Limitations

Despite these advances, GPT-5 is not yet AGI. It lacks continuous learning in deployment, requiring offline retraining for new knowledge. Hallucination rates, though reduced to 1.6% on the HealthBench Hard Hallucinations test, still impede reliability in high-stakes domains (Zeff, 2025). Ethical and safety guardrails have improved via “safe completions,” but adversarial jailbreaks remain a concern (Strickland, 2025).
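A per-response rate that looks small compounds across multi-step work. As a rough illustration, assume (unrealistically) that each response hallucinates independently with probability 0.016, the HealthBench Hard figure quoted above; the chance that at least one of n responses contains a hallucination is then 1 - (1 - p)^n. A benchmark rate will not transfer directly to other tasks, so treat this as a back-of-the-envelope sketch.

```python
def p_any_hallucination(p: float, n: int) -> float:
    """Probability of at least one hallucination in n independent responses."""
    return 1.0 - (1.0 - p) ** n


for n in (1, 10, 50):
    print(n, round(p_any_hallucination(0.016, n), 3))
```

Under these assumptions, a ten-step workflow has roughly a 15% chance of containing at least one hallucinated answer, which is why a 1.6% rate can still be too high for high-stakes use.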

According to Matt O’Brien of AP News (O’Brien, 2025), GPT-5 resets the architecture of OpenAI’s flagship technology, laying the groundwork for future releases. Yet Sam Altman acknowledged that GPT-5 is still missing “many things quite important” for AGI, most notably the ability to keep learning on its own after deployment (Milmo, 2025).

Strategic Moves in the AI Hardware Landscape

AI models of GPT-5’s scale demand unprecedented compute power. Nvidia GPUs remain dominant, but the market is rapidly diversifying as startups offer specialized accelerators. Graphcore and Cerebras target general-purpose AI workloads, while niche players are betting on transformer-only ASICs. This shift toward specialization reflects the rising cost of training and inference at scale (Wassim, 2024).

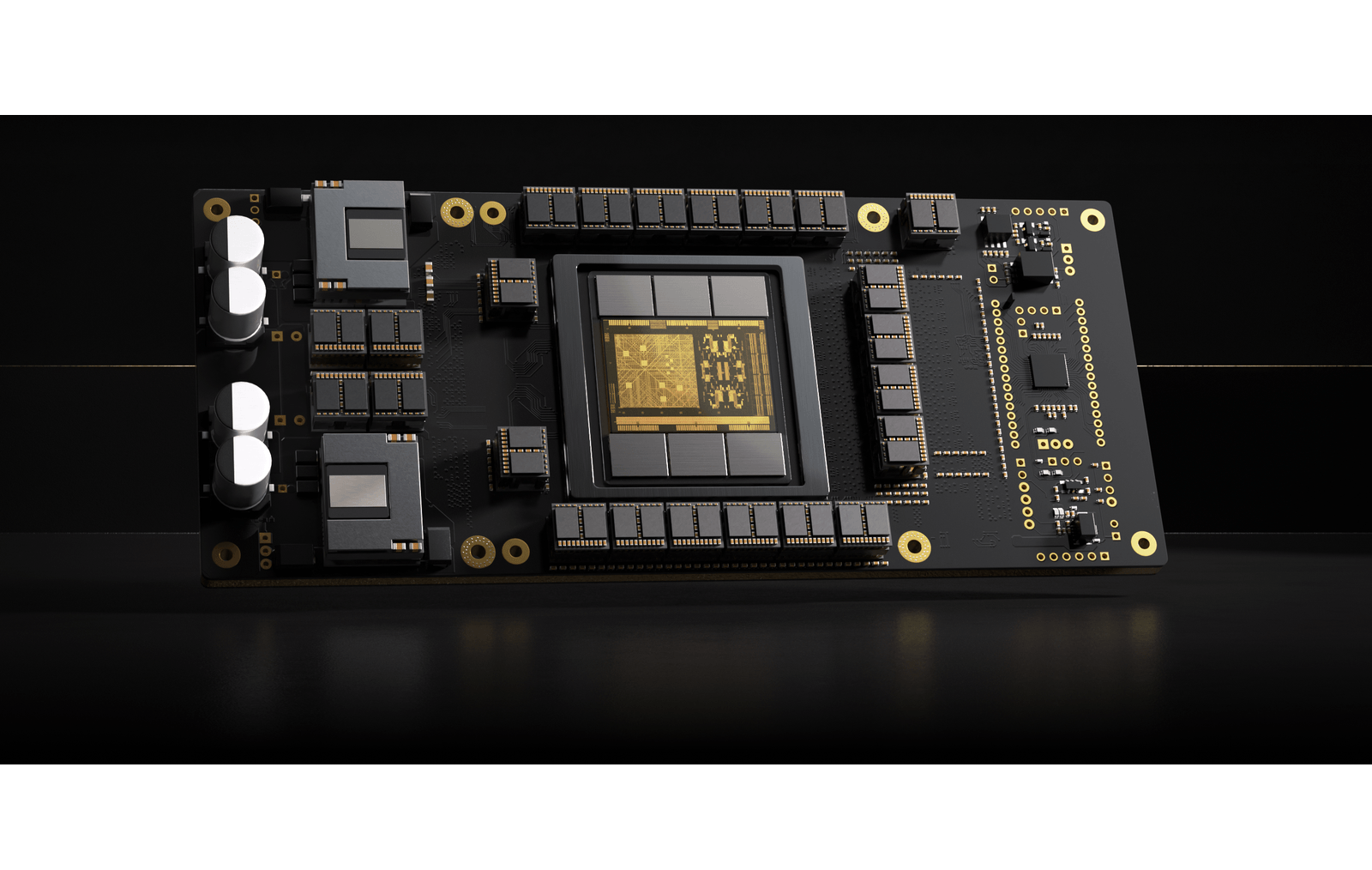

In June 2024, BitsWithBrains reported Etched.ai’s claim that its Sohu chip delivers up to 20× faster inference than Nvidia H100 GPUs by hard-wiring transformer matrix multiplications into silicon, achieving about 90% FLOP utilization versus 30–40% on general-purpose hardware (BitsWithBrains Editorial Team, 2024).
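The two claims can be checked against each other with simple arithmetic. If effective throughput is roughly peak FLOPS multiplied by utilization, then raising utilization from 30–40% to 90% accounts for only a 2.25× to 3× gain on its own. For the 20× figure to hold, the remaining factor (roughly 6.7× to 8.9×) would have to come from higher peak throughput per chip, plausibly from devoting more silicon to matrix multiplication. This decomposition and the `required_peak_ratio` helper are our own illustration, not figures published by Etched.

```python
def required_peak_ratio(speedup: float, util_new: float, util_old: float) -> float:
    """Peak-FLOPS ratio needed to reach a target speedup.

    Assumes effective throughput = peak FLOPS * utilization, so
    speedup = (peak ratio) * (utilization ratio).
    """
    return speedup / (util_new / util_old)


for util_old in (0.30, 0.40):
    gain = 0.90 / util_old
    peak = required_peak_ratio(20.0, 0.90, util_old)
    print(f"utilization gain {gain:.2f}x, peak-FLOPS gain needed {peak:.1f}x")
```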

Etched and the Sohu ASIC

Genesis and Funding

Founded in 2022, Etched secured $120 million to develop Sohu, its transformer-specific ASIC (Wassim, 2024). This investment reflects confidence in a hyper-specialized strategy aimed at reducing AI infrastructure costs and energy consumption.

Technical Superiority

Sohu integrates 144 GB of HBM3E memory per chip, which Etched says enables large batch sizes without performance degradation, a critical property for services like ChatGPT and Google Gemini that handle thousands of concurrent requests (Wassim, 2024). Etched also claims that a single server with eight Sohu chips can replace 160 Nvidia H100 GPUs, shrinking hardware footprint and operational overhead.
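Memory capacity translates into batch size because, during transformer inference, each in-flight request keeps a key-value (KV) cache that grows with its context length, so the number of concurrent requests a chip can hold is bounded by the memory left after the model weights. The sketch below estimates that bound. The model shape (32 layers, 8 KV heads, head dimension 128, 16-bit values, 16 GB of weights) and the 4,096-token context are hypothetical values chosen for illustration, not Sohu or GPT-5 specifications, and the estimate ignores activations and memory fragmentation. The last line restates the server claim per chip: 160 H100s replaced by 8 Sohu chips works out to 20 H100s per Sohu.

```python
def kv_bytes_per_token(layers: int, kv_heads: int, head_dim: int,
                       bytes_per_value: int) -> int:
    # Factor of 2: one key vector and one value vector per layer and KV head.
    return 2 * layers * kv_heads * head_dim * bytes_per_value


def max_concurrent_sequences(hbm_gb: float, weights_gb: float,
                             context_tokens: int, per_token: int) -> int:
    # Memory left after weights, divided by the KV cache of one full sequence.
    free_bytes = (hbm_gb - weights_gb) * 1e9
    return int(free_bytes // (context_tokens * per_token))


# Hypothetical model shape; not a published Sohu or GPT-5 configuration.
per_token = kv_bytes_per_token(layers=32, kv_heads=8, head_dim=128,
                               bytes_per_value=2)
seqs = max_concurrent_sequences(hbm_gb=144, weights_gb=16,
                                context_tokens=4096, per_token=per_token)
print(per_token, seqs)

# Server-level claim restated per chip: 160 H100 GPUs / 8 Sohu chips.
print(160 / 8)
```

Under these assumptions each token needs 128 KiB of KV cache and a 144 GB chip could hold a couple of hundred full-length sequences at once; doubling the context would roughly halve that number.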

Strategic Partnerships and Demonstrations

Etched is working with TSMC to manufacture Sohu on its 4 nm process and has dual-sourced its HBM3E memory, aiming for production scalability and supply reliability (Wassim, 2024). The company also showcased “Oasis,” a real-time interactive video generator built in collaboration with Decart, as a use case that Etched argues is economically feasible only on Sohu hardware (Lyons, 2024). This three-step strategy (invent an application, demonstrate its feasibility, then launch the ASIC to serve it) illustrates how Etched is trying to create demand for its specialized chip.

Market Potential and Risks

While Sohu’s efficiency is compelling, its transformer-only focus raises concerns about adaptability if AI architectures evolve beyond transformers. Early access programs and developer cloud services aim to onboard customers in sectors like streaming, gaming, and metaverse applications, but the technology remains unproven at hyperscale (Lyons, 2024).

Implications for AGI

Hardware acceleration lowers latency and cost barriers, enabling more frequent experimentation and real-time multimodal inference. If transformer-specialized chips like Sohu deliver on their promises, faster feedback loops could hasten algorithmic breakthroughs. Yet AGI requires more than raw compute: it demands architectures capable of lifelong learning, causal reasoning, and autonomous goal formulation, and hardware alone cannot supply those.

Policy and regulation will also shape the trajectory. Continuous online learning raises new safety and accountability challenges, potentially requiring hardware-level enforcement of policy constraints (Griffiths & Varanasi, 2025).

Challenges and Ethical Considerations

Safety and Hallucinations

Despite reduced hallucination rates, GPT-5 may still propagate misinformation in critical sectors such as healthcare and finance. OpenAI’s ongoing hiring of forensic psychiatrists to study the mental-health effects of its products underscores how serious uncontrolled outputs can be (Strickland, 2025).

Data Privacy

Agentic functionalities that access personal calendars or emails necessitate robust permission and encryption frameworks. Misconfigurations could expose sensitive data in automated workflows.
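One common way to limit that exposure is least privilege: check every tool call an agent makes against the scopes the user has explicitly granted, and fail closed otherwise. The sketch below shows the idea; the scope strings, tool names, and the `ScopedToolGate` class are hypothetical and not drawn from any particular product.

```python
class PermissionDenied(Exception):
    """Raised when an agent attempts a tool call the user has not authorized."""


class ScopedToolGate:
    """Allows a tool call only if every scope it requires has been granted."""

    # Hypothetical mapping from tool name to the scopes it needs.
    REQUIRED_SCOPES = {
        "read_calendar": {"calendar.read"},
        "read_inbox": {"email.read"},
        "send_email": {"email.send"},
    }

    def __init__(self, granted: set[str]) -> None:
        self.granted = set(granted)

    def check(self, tool: str) -> None:
        needed = self.REQUIRED_SCOPES.get(tool)
        if needed is None:
            # Unknown tools are denied rather than allowed by default.
            raise PermissionDenied(f"unregistered tool: {tool}")
        missing = needed - self.granted
        if missing:
            raise PermissionDenied(f"{tool} needs {sorted(missing)}")


gate = ScopedToolGate(granted={"calendar.read"})
gate.check("read_calendar")  # allowed: scope was granted
try:
    gate.check("send_email")
except PermissionDenied as exc:
    print("blocked:", exc)
```

Denying unregistered tools by default means a misconfigured or newly added tool cannot silently gain access to a user's data.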

Regulatory Scrutiny

OpenAI faces legal challenges tied to its nonprofit origins and its attempted conversion to a for-profit structure, which has drawn oversight from state attorneys general. Specialized hardware firms may also face export controls if their chips are judged to enable dual-use (civilian and military) applications.

Environmental Impact

While Sohu claims energy efficiency gains, the overall environmental footprint of proliferating data centers and embedded AI systems remains substantial. Lifecycle analyses must account for chip manufacturing and e-waste.
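A lifecycle estimate adds one-time embodied emissions from manufacturing, plus end-of-life handling, to the operational emissions from electricity over the hardware's service life. The sketch below encodes that accounting. The `lifecycle_kgco2e` helper is hypothetical, and every input in the example is a placeholder, not a measured figure for Sohu, the H100, or any data center.

```python
def lifecycle_kgco2e(embodied_kg: float, power_kw: float, hours: float,
                     pue: float, grid_kg_per_kwh: float,
                     end_of_life_kg: float = 0.0) -> float:
    """Total kg CO2e = embodied + operational + end-of-life.

    Operational energy is scaled by PUE (power usage effectiveness) so that
    facility overhead such as cooling is included.
    """
    operational = power_kw * hours * pue * grid_kg_per_kwh
    return embodied_kg + operational + end_of_life_kg


# Placeholder inputs: a 10 kW server running for three years.
total = lifecycle_kgco2e(embodied_kg=1000.0, power_kw=10.0,
                         hours=3 * 8760, pue=1.2, grid_kg_per_kwh=0.4,
                         end_of_life_kg=50.0)
print(round(total))
```

With these placeholders operational emissions dominate, which is why per-chip efficiency gains matter; but an energy-only comparison still omits the embodied and end-of-life terms that lifecycle accounting captures.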

Key Takeaways

- GPT-5 Advances: Improved reasoning, coding (“vibe coding”), and agentic tasks push the model closer to human-level versatility (Zeff, 2025).

- AGI Gap: True AGI demands continuous, autonomous learning—a feature GPT-5 still lacks (Milmo, 2025).

- Hardware Specialization: Startups like Etched claim up to 20× faster inference for transformer models with ASICs such as Sohu, but their narrow focus poses adaptability risks (BitsWithBrains Editorial Team, 2024; Wassim, 2024).

- Strategic Demonstrations: Projects like Oasis illustrate how specialized hardware can create entirely new application markets (Lyons, 2024).

- Ethical and Regulatory Hurdles: Safety, privacy, and environmental considerations will influence the pace of AGI development (Strickland, 2025; Griffiths & Varanasi, 2025).

References

- BitsWithBrains Editorial Team. (2024, June 30). The AI Chip Race Heats Up Yet Again: Etched’s Transformer Gambit. BitsWithBrains. https://www.bitswithbrains.com/news/the-ai-chip-race-heats-up-yet-again%3A-etched%27s-transformer-gambit

- Griffiths, B. D., & Varanasi, L. (2025, August 7). Here’s why Sam Altman says OpenAI’s GPT-5 falls short of AGI. Business Insider. https://www.businessinsider.com/sam-altman-openai-gpt-5-agi-2025-8

- Lyons, A. (2024, November 2). Etched’s Oasis: Creating a Market For Sohu. Chipstrat. https://www.chipstrat.com/p/etcheds-oasis-creating-a-market-for

- Milmo, D. (2025, August 7). OpenAI says latest ChatGPT upgrade is big step forward but still can’t do humans’ jobs. The Guardian. https://www.theguardian.com/technology/2025/aug/07/openai-chatgpt-upgrade-big-step-forward-human-jobs-gpt-5

- O’Brien, M. (2025, August 7). OpenAI launches GPT-5, a potential barometer for whether AI hype is justified. AP News. https://apnews.com/article/d12cd2d6310a2515042067b5d3965aa1

- Strickland, E. (2025). OpenAI Launches GPT-5, the Next Step in Its Quest for AGI. IEEE Spectrum. https://spectrum.ieee.org/openai-gpt-5-agi

- Wassim. (2024, July 2). Etched Sohu: Revolutionizing AI with Transformer-Specific Chips. Medium. https://medium.com/@maxel333/etched-sohu-revolutionizing-ai-with-transformer-specific-chips-4a8661394f49

- Zeff, M. (2025, August 7). OpenAI’s GPT-5 is here. TechCrunch. https://techcrunch.com/2025/08/07/openais-gpt-5-is-here/

Related Content

- Great Scientists Series

- Careers in Quantum Computing: Charting the Future

- John von Neumann: The Smartest Man Who Ever Lived

- The Development of GPT-3

- IBM Watson's Jeopardy Win: Showcasing AI Power

- Steve Jobs: Visionary Innovator of Technology

- Tesla: The Electrifying Genius

- Perplexity AI: A Game-Changing Tool

- Understanding Artificial General Intelligence (AGI)

- Self-Learning AI in Video Games

- Teen Entrepreneurship Tools

- Tesla's FSD System: Paving the Way for Autonomous Driving

- The First AI Art: The Next Rembrandt

- AI in Space Exploration: Pivotal Role of AI Systems

- The Birth of Chatbots: Revolutionizing Customer Service

- Alexa: Revolutionizing Home Automation

- Google's DeepMind Health Projects

- Smarter Than Einstein Podcast

- The Creation of Siri: Pioneering a New Era of Virtual Assistants

- Deep Blue Beats Kasparov: The Dawn of AI in Chess

- The Invention of Neural Networks

Stay Connected

Newsletter

Sign up for the Lexicon Labs Newsletter to receive updates on book releases, promotions, and giveaways.

Catalog of Titles

Our list of titles is updated regularly. View our full Catalog of Titles
